I experimented with our Raccoon Driving a Tractor prompt from paint.wtf. I first browsed the top paint.wtf submissions for this prompt to get a feel for what the 3000+ human artists discovered that CLIP responded best to. Those were pretty good but not quite the style I was going for, so I tried to guide the style next. Any variation on "MS Paint" gave me "paintbrush"-looking strokes, not the simple style I was hoping for. I also tried variations on "drawing", "sketch", "cartoon", "stick figure", "vector", etc. without much luck. I never did get it to mimic the style of the human illustrators, but the prompt "A portrait mode close-up of Rocky the Raccoon, the cute and lovable main character from Disney Pixar's upcoming film set a farm in the Cars universe, driving a big John Deere tractor." with the imagenet base turned out pretty neat.

My favorite AI-generated "Raccoon driving a tractor" output.

On Twitter, there are a few different "hacks" people have found to make your images look more impressive. The most famous is to append "unreal engine" or "rendered with unreal engine" to the end of your prompt. Some other examples are "trending on artstation" and "top of /r/art". These did give the images an interesting sense of vibrance and dynamism, but at the expense of closely matching the prompt (and they added artifacts like weird walls and text), as with "raccoon driving a tractor | trending on artstation | unreal engine". Over-indexing on the "non-hack" part of the prompt did seem to do better. The following is "raccoon | driving | tractor | raccoon driving a tractor | unreal engine".

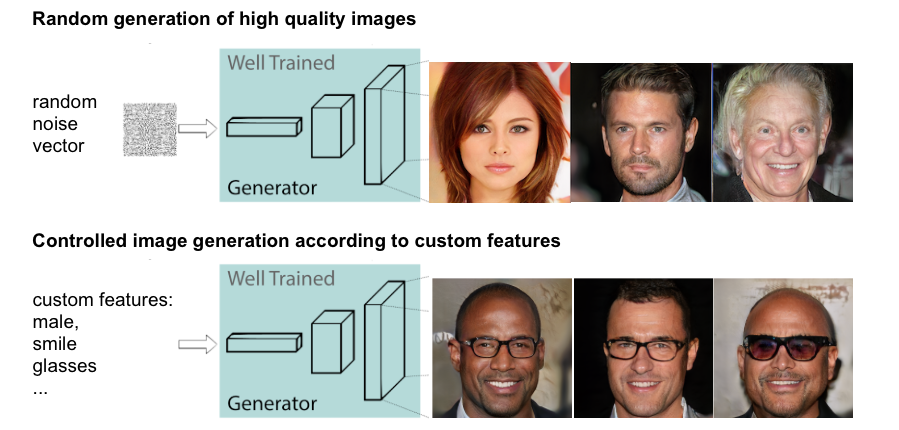
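To make the "over-indexing" idea concrete, here is a minimal sketch of how a `|`-separated prompt could be broken into sub-prompts, and how much of the result is about the subject rather than the style hacks. It assumes the notebook treats each `|`-separated phrase as its own target, which the text above suggests but does not state outright. The helper names `split_prompts` and `subject_share` are made up for illustration and are not part of any notebook.

```python
def split_prompts(text):
    """Split a combined prompt on '|' and drop surrounding whitespace and empties."""
    return [part.strip() for part in text.split("|") if part.strip()]


def subject_share(prompts, subject_words):
    """Fraction of sub-prompts that mention at least one subject word."""
    hits = sum(any(w in p.lower().split() for w in subject_words) for p in prompts)
    return hits / len(prompts)


# The two prompts discussed above.
hack_heavy = "raccoon driving a tractor | trending on artstation | unreal engine"
subject_heavy = "raccoon | driving | tractor | raccoon driving a tractor | unreal engine"

# Hypothetical definition of the "non-hack" part of the prompt.
subject = {"raccoon", "driving", "tractor"}

for text in (hack_heavy, subject_heavy):
    prompts = split_prompts(text)
    print(len(prompts), "sub-prompts,", round(subject_share(prompts, subject), 2), "about the subject")
```

Under that assumption, the first prompt spends one of its three sub-prompts on the subject, while the second spends four of five, which is one way to read why it matched the prompt more closely.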

#Face drawing generator algorithm update#

I used a Google Colab notebook that makes it really easy to experiment with CLIP+VQGAN in a visual way. You just update the tunable parameters in the UI, hit "run", and away it goes, generating images and progressively steering the outputs towards your target prompts.

Earlier this year, OpenAI announced a powerful art-creation model called DALL-E. Their model hasn't yet been released, but it has captured the imagination of a generation of hackers, artists, and AI enthusiasts who have been experimenting with using the ideas behind it to replicate the results on their own.

The magic behind how these attempts work is combining another OpenAI model called CLIP (which we have written about extensively before) with generative adversarial networks (which have been around for a few years) to "steer" them into producing a desired output. The intuition is that these GANs have actually learned a lot more from the images they were trained to mimic than we previously knew; the key is finding the information we're looking for deep in their latent space.

#A Human-Filled AI Sandwich#

We previously built a project called paint.wtf which used CLIP as the scoring algorithm behind a Pictionary-esque game (whose prompts were generated by yet another model called GPT). We affectionately described this project as an "AI Sandwich" because the task and evaluation are both controlled by machine learning models and the "work" of drawing the pictures is done by humans. Over 100,000 people have played the game thus far, so we have a good idea of how humans perform the drawing task.
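The "steering" idea can be sketched in a few lines. This is a toy under loud assumptions, not the real pipeline: a fixed random linear map stands in for the generator plus CLIP's image encoder, a random vector stands in for the CLIP text embedding of the prompt, and finite-difference gradients stand in for backpropagation. Only the shape of the loop carries over: score the current output against the prompt, then nudge the latent in the direction that raises the score.

```python
import math
import random


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)


def toy_generator(weights, z):
    """Stand-in for generator + image encoder: a fixed linear map of the latent."""
    return [sum(w * zi for w, zi in zip(row, z)) for row in weights]


def steer(weights, target, z, steps=200, lr=0.1, eps=1e-4):
    """Nudge latent z uphill on similarity using finite-difference gradients."""
    history = []
    for _ in range(steps):
        base = cosine(toy_generator(weights, z), target)
        history.append(base)
        grad = []
        for i in range(len(z)):
            bumped = list(z)
            bumped[i] += eps
            grad.append((cosine(toy_generator(weights, bumped), target) - base) / eps)
        z = [zi + lr * g for zi, g in zip(z, grad)]
    history.append(cosine(toy_generator(weights, z), target))
    return z, history


rng = random.Random(0)
LATENT_DIM, EMBED_DIM = 8, 4  # tiny, arbitrary sizes chosen for the toy
weights = [[rng.gauss(0, 1) for _ in range(LATENT_DIM)] for _ in range(EMBED_DIM)]
target = [rng.gauss(0, 1) for _ in range(EMBED_DIM)]  # stand-in for the text embedding
z0 = [rng.gauss(0, 1) for _ in range(LATENT_DIM)]

z_final, history = steer(weights, target, z0)
print(f"similarity: start={history[0]:.3f} end={history[-1]:.3f}")
```

In the real setup the score would come from CLIP comparing a generated image to the prompt, and the gradient would flow back through the generator, but the loop keeps the same shape: search the latent space for a point whose output matches the text.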
